An animated DNA molecule from ‘Jurassic Park’.

Recent developments in nanobiotechnology can bring to mind science fiction, but STS teaches us to go beyond thinking of the dangers of these technologies in terms of “escape” and “loss of control.”

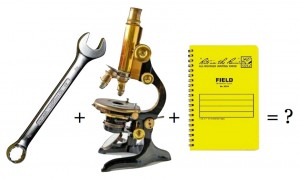

At the Wyss Institute for Biologically Inspired Engineering at Harvard University, researchers have developed robotic devices made from DNA that they hope will eventually be used to treat various diseases (1). Modeled after the white blood cells of the human immune system, these nanobots are designed like little trucks, carrying “molecular messages” to diseased cells that tell them to commit suicide. In other words, the nanobots seek out and destroy whichever human cells their designers choose to target. In an interview with Twig Mowatt for the Harvard Gazette (February 12, 2012), principal investigator George Church pointed out that these nanobots are a major breakthrough in DNA nanobiotechnology research. When I read this article, all I could think was, “Didn’t anyone at the Wyss Institute see The Terminator? Jurassic Park?? The Matrix???”

Science fiction is replete with stories in which the melding of biology and technology has catastrophic consequences. Some of the most frightening imagine that nanobots, or similar cybernetic technologies, might depart from their human-designed conduct and develop some form of intentionality, behaving much like viruses. Viruses target cells, take over their metabolic processes for a time, and then destroy them. Indeed, the primary difference between these nanobots and viruses is that the nanobots are designed to destroy their targeted cells immediately. If we take advances like those discussed in the Mowatt article seriously, as just one step along a path of ever-improving nanobiotechnologies, then rogue nanobots could plausibly become even more dangerous than highly infectious viruses.

The discovery of the structure of DNA in the mid-20th century radically altered scientists’ conception of nature, and since then biology has increasingly been reduced to DNA. With the development of nanobots, DNA has become the fundamental building block not only for life but also for machines. The nucleotides used to build the nanobots are the same ones that make up the DNA strands carrying the genetic code of every living thing on this planet, code that arguably shapes many of their traits and behaviors as well. Researchers and reporters emphasize that nanobots have great therapeutic potential because they are “biocompatible” and “biodegradable.” Yet, functionally, they straddle the line between biology and information, and it is this dual status that makes them economically as well as therapeutically valuable.

Donna Haraway has written that “engineering is the guiding logic of life sciences in the twentieth century” (2). Arguably, the revolutionary moment in 20th-century life sciences was not the discovery of the structure of DNA per se, but rather the re-envisioning of nature as a set of systems driven by DNA. Much like Foucault in his classic text The Order of Things, Haraway reads the development of the life sciences and technologies in the 20th century in a way that reveals scientific breakthroughs to be products of cultural history and political economy, whose relations are constantly shifting. Extended into the early 21st century, Haraway’s analysis suggests that these relations increasingly take the form of cybernetic interventions that are as profitable for the health industry as they are promising for people with diseases.

STS scholars might re-read the Harvard Gazette article with these analyses in mind. Situating new discoveries in the health industry in relation to political economy and cultural history, one might read Mowatt’s version of the DNA nanobot story as one in which the belief that humans can control nature trumps the suspicion that we do not. Thinking about the DNA robot story in Haraway’s terms encourages us to shift our attention away from the language of “major breakthroughs” and “implementation obstacles” and toward questions the article does not ask about the use and misuse of DNA-based technologies for human ends. What does it mean, morally and ethically, to move from DNA as the building block of biotic life to DNA as the building block of both biotic life and abiotic machines? Who decides what the best uses for DNA-related technologies are, and what might the biological sciences look like if they were not driven by corporate interests? If DNA is both a key driver of natural systems and the point of human intervention, are novel discoveries and financial gains (like those in nanobiotechnology) worth the possible risks to humanity (like those envisioned in science fiction)?

In the Harvard Gazette article about DNA nanobots, just as at the beginning of Jurassic Park, there is no mention of unforeseen consequences or potentially problematic outcomes. Instead, the “right” combination of biology and technology begets a perfectly functioning, closed system in which human-made and human-controlled robots kill only cancer cells. Cancer patients live; companies profit. This clean vision should arouse suspicion: the dinosaurs do escape from Jurassic Park, and in The Terminator and The Matrix, sentient technology created by humans develops its own ideas about what its existence should be for. But Foucault and Haraway, and STS more generally, teach us to go beyond thinking about the dangers of these technologies simply in terms of “escape” and “loss of control.” They inspire us to ask further questions informed by the knowledge that science is always already part of a constantly changing social world: What if the next step at the Wyss Institute were to enable these nanobots to read all the genetic code inside the body and decide for themselves what should be destroyed, so that they could fix not only cancer but any other “problems” they find? What then?

Keywords: biotechnology; genetic engineering; machine life

References:

1. Mowatt, Twig. “Sending DNA robot to do the job.” Harvard Gazette, February 12, 2012.

2. Haraway, Donna J. Simians, Cyborgs, and Women: The Reinvention of Nature. New York: Routledge, 1991, 47.

Further Reading:

- Benjamin, Ruha. People’s Science: Bodies and Rights on the Stem Cell Frontier. Stanford, CA: Stanford University Press, 2013.

- Eribon, Didier. Michel Foucault. Translated by Betsy Wing. Cambridge, MA: Harvard University Press, 1991 [1989].